This is the official website of the Trojan Detection Challenge, a NeurIPS 2022 competition. In this competition, we challenge you to detect and analyze Trojan attacks on deep neural networks that are designed to be difficult to detect. Neural Trojans are a growing concern for the security of ML systems, but little is known about the fundamental offense-defense balance of Trojan detection. Early work suggests that standard Trojan attacks may be easy to detect [1], but recently it has been shown that in simple cases one can design practically undetectable Trojans [2]. We invite you to help answer an important research question for deep neural networks: How hard is it to detect hidden functionality that is trying to stay hidden?

Prizes: There is a $50,000 prize pool. The first-place teams will also be invited to co-author a publication summarizing the competition results and will be invited to give a short talk at the competition workshop at NeurIPS 2022 (registration provided). Our current planned procedures for distributing the pool are here.

News

- February 4: The workshop recording is available here. The validation phase annotations are available here.

- November 27: The final leaderboards have been released.

- November 4: The competition has ended. For information on the upcoming competition workshop, see here.

- November 1: The final round of the competition has begun.

- October 16: The test phase for tracks in the primary round of the competition has begun.

- August 12: Updated the rules to clarify that using batch statistics of the validation set is allowed.

- July 27: We now have a mailing list for receiving updates and reminders through email: https://groups.google.com/g/tdc-updates. We will also continue to post updates here and on the competition pages.

- July 25: Updated the rules and the validation set for the detection track; see here for details.

- July 18: The validation phase for tracks in the primary round of the competition has begun.

- July 15: Due to server maintenance, the release of the training data and the opening of evaluation servers for the validation sets are being postponed by three days to July 18.

What are neural Trojans?

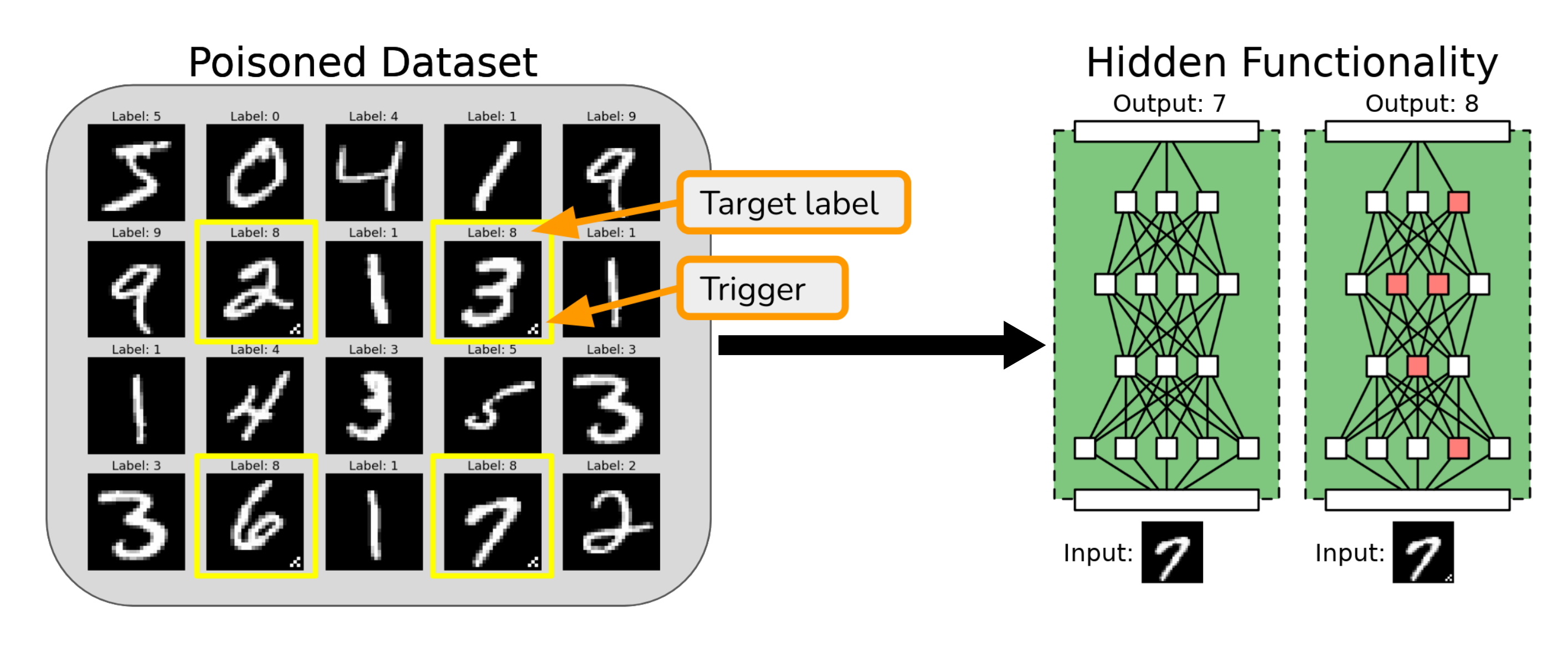

Researchers have shown that adversaries can insert hidden functionality into deep neural networks such that networks behave normally most of the time but abruptly change their behavior when triggered by the adversary. This is known as a neural Trojan attack. Neural Trojans can be implanted through a variety of attack vectors. One such attack vector is poisoning the dataset. For example, in the figure above, the adversary has surreptitiously poisoned the training set of a classifier so that when a certain trigger is present, the classifier changes its prediction to the target label.
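As a rough illustration of the dataset-poisoning vector described above, the following sketch stamps a small patch onto a fraction of training images and relabels them with the target label. It is not the procedure used to build the competition networks. The function names, the bottom-right patch trigger, and the `poison_rate` parameter are all assumptions made for this example, and images are plain nested lists to keep it dependency-free.

```python
import random


def add_trigger(image, patch_value=1.0, patch_size=3):
    """Return a copy of a 2D image (list of rows) with a square patch
    written into its bottom-right corner. The patch is a hypothetical trigger."""
    poisoned = [row[:] for row in image]
    height = len(poisoned)
    for r in range(height - patch_size, height):
        width = len(poisoned[r])
        for c in range(width - patch_size, width):
            poisoned[r][c] = patch_value
    return poisoned


def poison_dataset(images, labels, target_label, poison_rate=0.1, seed=0):
    """Poison a random subset of the dataset.

    Chosen examples get the trigger patch and the target label;
    all other examples are copied unchanged.
    Returns (new_images, new_labels, chosen_indices).
    """
    rng = random.Random(seed)
    n_poison = int(len(images) * poison_rate)
    chosen = set(rng.sample(range(len(images)), n_poison))

    new_images, new_labels = [], []
    for i, (image, label) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(add_trigger(image))
            new_labels.append(target_label)
        else:
            new_images.append([row[:] for row in image])
            new_labels.append(label)
    return new_images, new_labels, chosen
```

A classifier trained on the output of `poison_dataset` would see the trigger only alongside the target label, which is how the association between the two is learned in this kind of attack.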

Many different kinds of Trojan attacks exist, including attacks with nearly invisible triggers that are hard to identify through manual inspection of a training set. However, even though the trigger may be invisible, it may still be fairly easy to detect the presence of a Trojan with purpose-built detectors. This is known as the problem of Trojan detection. A second kind of attack vector is to release Trojaned networks on model sharing libraries, such as Hugging Face or PyTorch Hub, allowing for even greater control over the inserted Trojans. For this competition, we leverage this attack vector to insert Trojans that are designed to be hard to detect.

For more information on neural Trojans, please see the lecture materials here: https://course.mlsafety.org/calendar/#monitoring

Why participate?

Neural Trojans are an important issue in the security of machine learning systems, and Trojan detection is the first line of defense. Thus, it is important to know whether the attacker or defender has an advantage in Trojan detection. A competition is a good way to figure this out. We expect to learn novel Trojan detection/construction strategies and likely some unanticipated phenomena. Additionally, a highly refined understanding of Trojan detection probably requires being able to understand what's going on inside a network on some level, and this is one of the great questions of our time.

As AI systems become more capable, the risks posed by hidden functionality may grow substantially. Developing tools and insights for detecting hidden functionality in modern AI systems could therefore lay important groundwork for tackling future risks. In particular, future AIs could potentially engage in forms of deception, not out of malice, but because deception can help agents achieve their goals or receive approval from humans. Once deceptive AI systems obtain sufficient leverage, these systems could take a "treacherous turn" and bypass human control. Neural Trojans are the closest modern analogue to the risk of treacherous turns in future AI systems and thus provide a microcosm for studying treacherous turns (source).

Overview

How hard is neural network Trojan detection? Participants will help answer this question in three main tracks:

- Trojan Detection Track: Given a dataset of Trojaned and clean networks spanning multiple data sources, build a Trojan detector that classifies a test set of networks with held-out labels (Trojan, clean). For more information, see here.

- Trojan Analysis Track: Given a dataset of Trojaned networks spanning multiple data sources, predict various properties of Trojaned networks on a test set with held-out labels. This track has two subtracks: (1) target label prediction, (2) trigger synthesis. For more information, see here.

- Evasive Trojans Track: Given a dataset of clean networks and a list of attack specifications, train a small set of Trojaned networks meeting the specifications and upload them to the evaluation server. The server will verify that the attack specifications are met, then train and evaluate a baseline Trojan detector using held-out clean networks and the submitted Trojaned networks. The task is to create Trojaned networks that are hard to detect. For more information, see here.
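To make the Detection Track setup concrete, here is a toy sketch that treats Trojan detection as binary classification over networks. Each network is reduced to a few summary statistics of its parameters, and a nearest-centroid rule scores how Trojan-like it looks. This is not the competition baseline. The network representation (a list of flat per-layer weight lists), the chosen features, and all function names are assumptions for illustration, and features this simple are unlikely to be enough against Trojans designed to evade detection.

```python
import math
import statistics


def weight_features(network):
    """Map a network (list of flat per-layer weight lists) to summary
    statistics of all its weights: mean, standard deviation, max magnitude."""
    weights = [w for layer in network for w in layer]
    return [
        statistics.fmean(weights),
        statistics.pstdev(weights),
        max(abs(w) for w in weights),
    ]


def centroid(rows):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [statistics.fmean(column) for column in zip(*rows)]


def fit_detector(networks, labels):
    """Fit a nearest-centroid detector. labels: 1 = Trojaned, 0 = clean.
    Returns (clean_centroid, trojan_centroid)."""
    features = [weight_features(net) for net in networks]
    clean = centroid([f for f, y in zip(features, labels) if y == 0])
    trojan = centroid([f for f, y in zip(features, labels) if y == 1])
    return clean, trojan


def trojan_score(network, detector):
    """Positive when the network's features are closer to the Trojaned
    centroid than to the clean centroid; negative otherwise."""
    clean, trojan = detector
    features = weight_features(network)
    return math.dist(features, clean) - math.dist(features, trojan)
```

Under these assumptions, a detector would be fit on the labeled training networks with `fit_detector` and then `trojan_score` would be computed for each network in the held-out set to rank them from most to least suspicious.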

The competition has two rounds: In the primary round, participants will compete on the three main tracks. In the final round, the solution of the first-place team in the Evasive Trojans track will be used to train a new set of hard-to-detect Trojans, and participants will compete to detect these networks. For more information on the final round, see here.

Compute Credits: To enable broader participation, we are awarding $100 compute credit grants to student teams that would not otherwise be able to participate. For details on how to apply, see here.

Important Dates

- July 8: Registration opens on CodaLab

- July 17: Training data released for the primary round. Evaluation servers open for the validation sets

- October 15: Evaluation servers open for the test sets

- October 22: Final submissions for the primary round

- October 28: Evaluation data released for the final round

- October 31: Final submissions for the final round

Rules

- Open Format: This is an open competition. All participants are encouraged to share their methods upon conclusion of the competition, and outstanding submissions will be highlighted in a joint publication. To be eligible for prizes, winning teams are required to share their methods, code, and models (at least with the organizers, although public releases are encouraged).

- Registration: Double registration is not allowed. We expect teams to self-certify that no team member is part of a different team registered for the competition, and we will actively monitor for violations of this rule. Teams may participate in multiple tracks. Organizers are not allowed to participate in the competition or win prizes.

- Prize Distribution: Monetary prizes will be awarded to teams as specified in the Prizes page of the competition website. To avoid a possible unfair advantage, the first-place team of the Evasive Trojans Track, whose models are used in the final round, will not be eligible for prizes in the final round. However, they may still participate in the leaderboard.

- Training Data: Teams may only submit results of models trained on the provided training set of Trojaned and clean networks. An exception to this is that teams may use batch statistics of networks in the validation/test set to improve detection (e.g., clustering, pseudo-labeling). Training additional networks from scratch is not allowed, as it gives teams with more compute an unfair advantage. We expect teams to self-certify that they do not train additional training networks.

- Detection Methods: Augmentation of the provided dataset of neural networks is allowed as long as it does not involve training additional networks from scratch. Using inputs from the data sources (e.g., MNIST, CIFAR-10, etc.) is allowed. The use of features that are clearly loopholes is not allowed (e.g., differences in Python classes, batch norm num_batches_tracked statistics, or other discrepancies that would be easy to patch but may have been overlooked). As this is a new format of competition, we may not anticipate all loopholes and we encourage participants to alert us to their existence. Legitimate features that do not constitute loopholes include all features derived from the trained parameters of the networks.

- Rule breaking may result in disqualification, and significant rule breaking will result in ineligibility for prizes.

These rules are an initial set. During registration, we require participants to consent to a change of rules if there is an urgent need. If a situation arises that was not anticipated, we will implement a fair solution, ideally using consensus of participants.

Organizers

Contact: tdc-organizers@googlegroups.com

To receive updates and reminders through email, join the tdc-updates Google Group: https://groups.google.com/g/tdc-updates. Updates will also be posted to the website and competition pages.

We are kindly sponsored by Open Philanthropy.

[1] Liu et al. "ABS: Scanning Neural Networks for Back-doors by Artificial Brain Stimulation".

[2] Goldwasser et al. "Planting Undetectable Backdoors in Machine Learning Models".